Introducing IO

IO is a new network proxy that puts application developers first.

IO makes application development easier and application deployments more secure. It handles communication challenges that developers get tired of facing over and over again and rarely have time to get right.

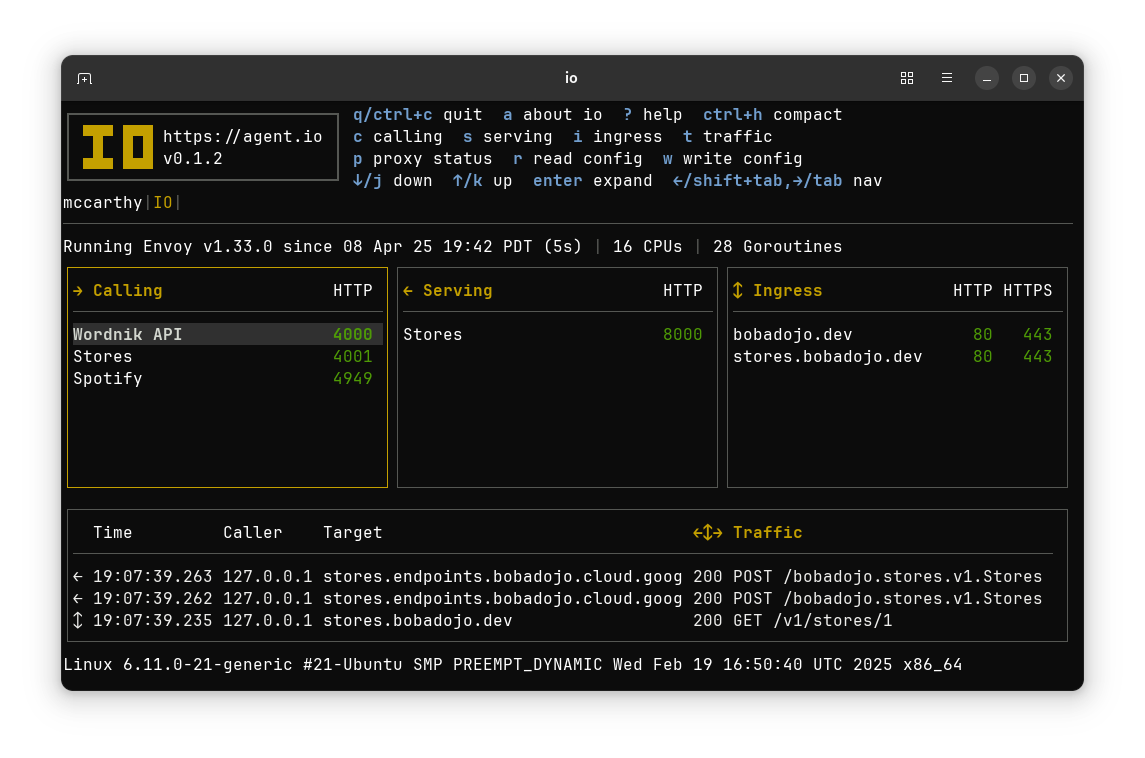

IO builds on Envoy, a high-performance, highly configurable proxy used by tens of thousands of organizations and developers. But Envoy’s great power comes with great complexity. IO makes Envoy easy to use by running it behind a control surface that addresses three common needs:

- Calling Mode provides a forward proxy that applications can use to securely call remote APIs.

- Serving Mode provides a reverse proxy that observes and secures API calls to an application.

- Ingress Mode provides a TLS gateway that can be used to serve multiple backends with HTTPS.

All three of IO’s modes can be supported by a single IO instance or can be divided among any number of cooperating IOs.

IO is also built for developers by being interactive. Its terminal user interface (TUI) makes it easy to configure and observe IO both locally and remotely.

How IO Works

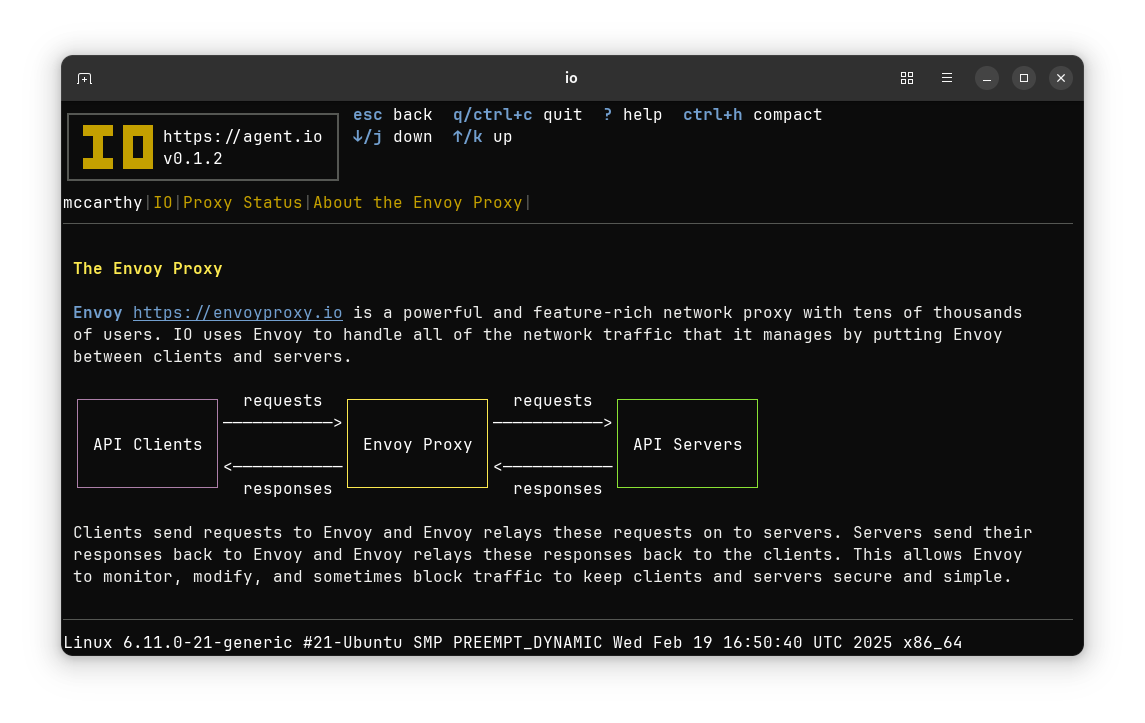

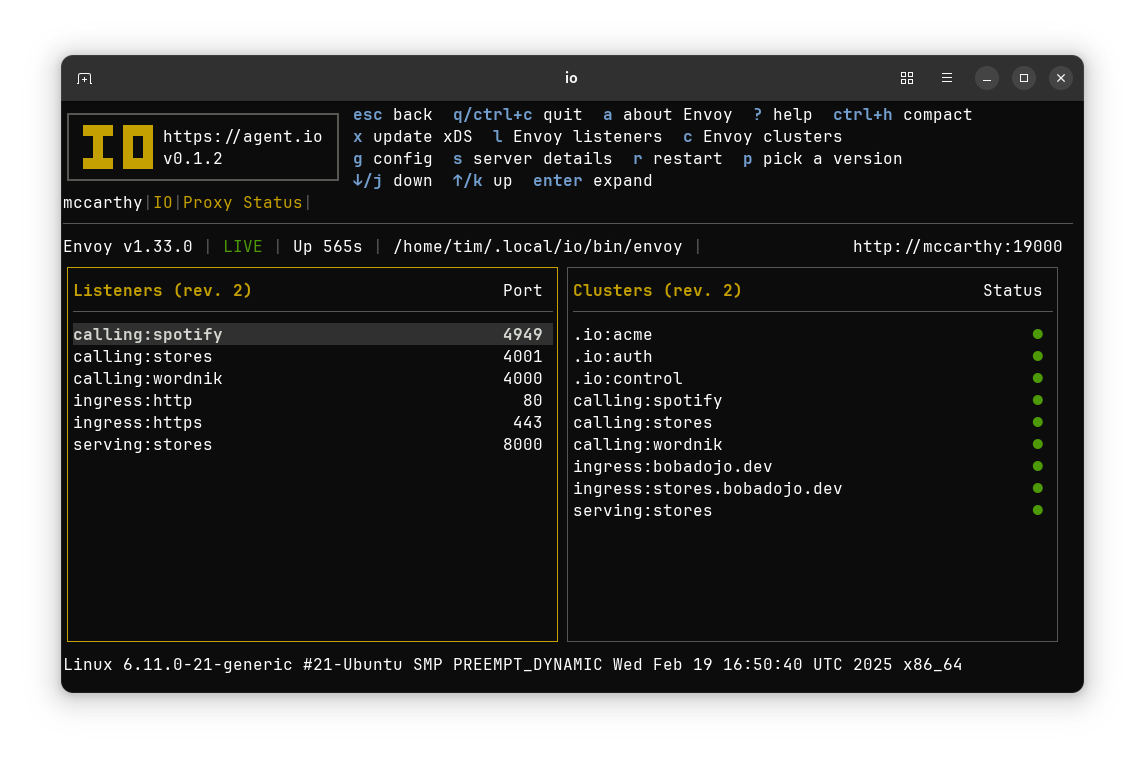

In all three modes, network traffic passes through an instance of Envoy that IO runs as a subprocess. Behind the scenes, IO controls this Envoy using Envoy APIs that include Envoy’s Cluster Discovery Service (CDS), Listener Discovery Service (LDS), and External Processing (ext_proc).

The terminal user interface allows users to configure and observe IO as it controls Envoy and manages network traffic. The TUI is, of course, optional: in production use, IO runs in the background and makes the TUI available over SSH. Configuration and traffic observations are stored in a local database, and remote monitoring and logging can be provided using OpenTelemetry. When IO needs secrets, they can be stored in IO’s local database or obtained from HashiCorp’s Vault.

How IO is Used

Each of IO’s modes can be used independently and they are also composable. One possible configuration uses IO to wrap an application like this:

Here remote API consumers call the application through an IO ingress that provides HTTPS and an IO serving interface that provides user authentication, rate limiting, and quota management. The application calls services that it uses through an IO calling interface that adds credentials needed by those upstream dependencies.

Each IO interface is optional and each provides capabilities that might otherwise be part of an application or operating environment.

Simplify and Secure Your Applications with IO

IO can manage all of an application’s API traffic, allowing applications to be simpler and more secure. IO allows you to connect your applications to the outside world through doors that you control and observe with powerful API management features and the proven performance and reliability of Envoy.

Calling Mode in Depth

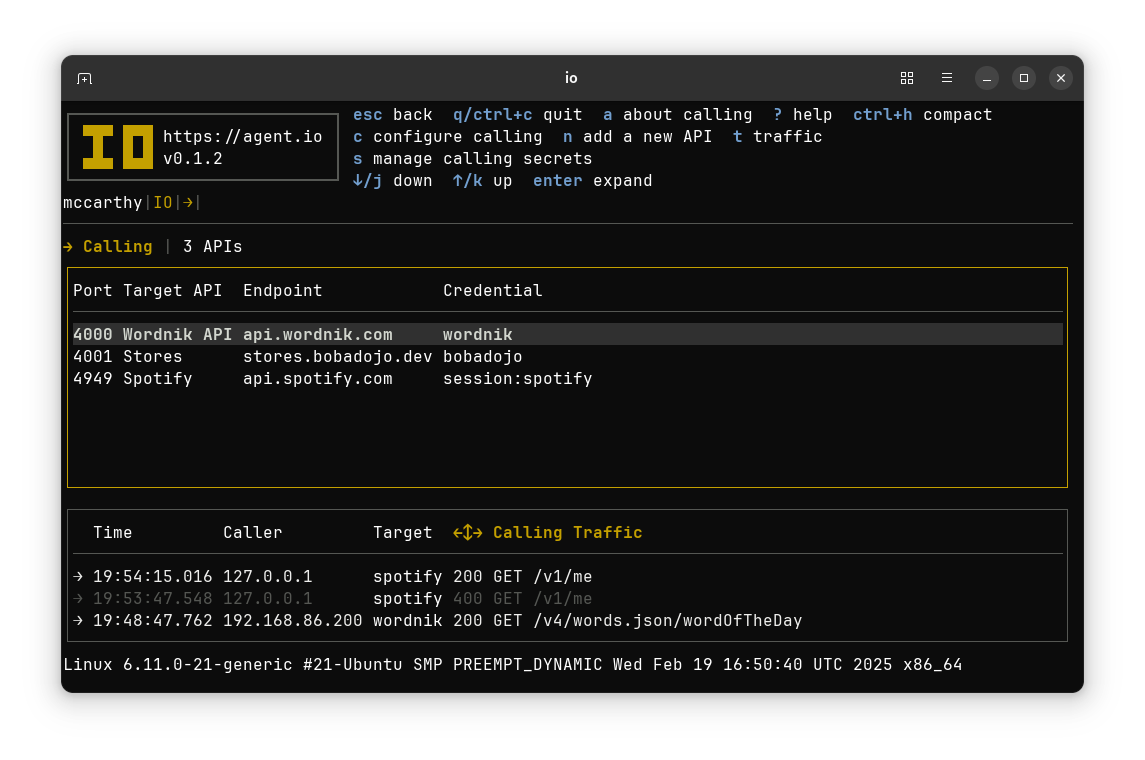

IO’s Calling Mode provides a forward proxy that applications can use to easily and securely make requests to remote APIs.

In Calling Mode, applications send API requests to IO. IO modifies these requests, adding tokens to authenticate them, and then IO forwards the modified requests to the appropriate servers.
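The rewrite described above can be pictured with a small sketch. This is only an illustration of the idea, not IO's implementation: it assumes the credential is attached as a query parameter (a header would work similarly), and the function name, local port, host `api.example.com`, and secret value are all hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def rewrite_for_upstream(local_url: str, target_host: str, param: str, secret: str) -> str:
    """Return the upstream URL for a request that an application sent to a local proxy.

    Hypothetical helper: shows the kind of rewrite a credential-adding
    forward proxy performs, under the assumptions stated above.
    """
    parts = urlsplit(local_url)
    # Keep the application's own query parameters, but drop any copy of the
    # credential parameter so only the proxy-held value is sent upstream.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != param
    ]
    query.append((param, secret))
    # The upstream call uses HTTPS and the real API host; the path is unchanged.
    return urlunsplit(("https", target_host, parts.path, urlencode(query), ""))


if __name__ == "__main__":
    print(
        rewrite_for_upstream(
            "http://localhost:4003/v1/items?limit=2",
            "api.example.com",
            "api_key",
            "EXAMPLE-SECRET",
        )
    )
```

The application only ever constructs the `localhost` URL; the secret appears solely in the URL that leaves the proxy.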

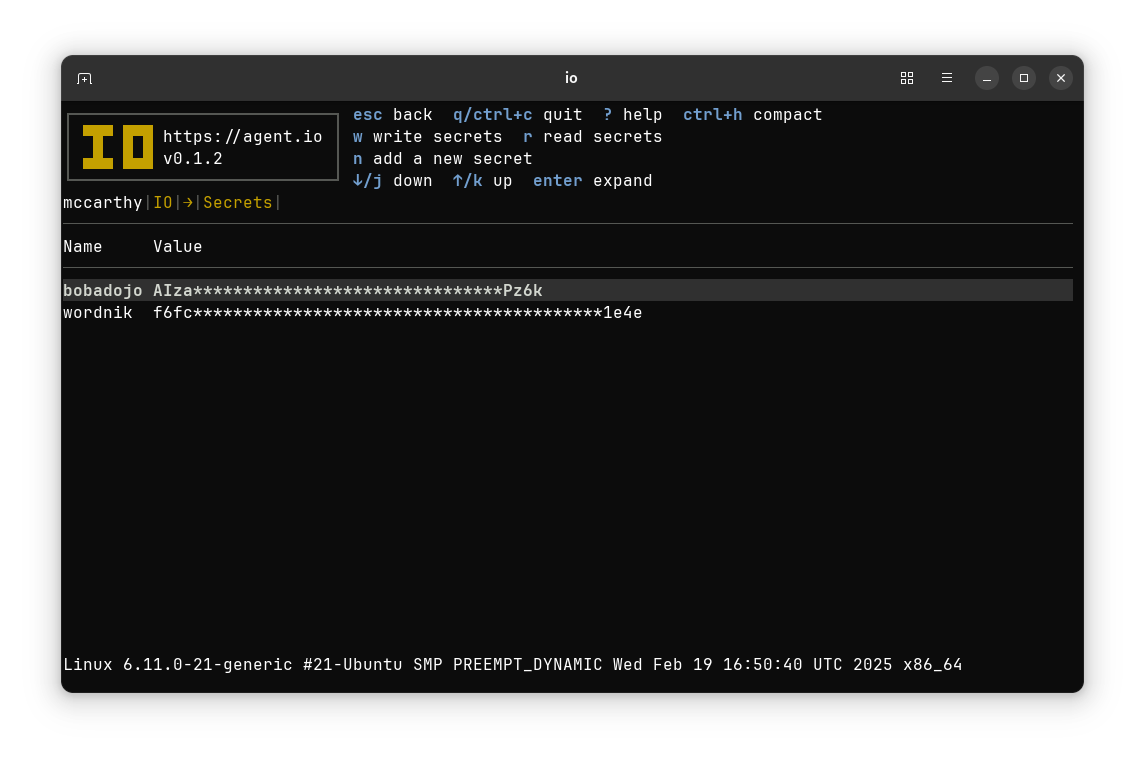

Calling Mode handles all API credentials, allowing applications to be written with no secrets and no risk of leaking secrets in source code or other developer-managed repositories. Secrets can be stored locally within IO or obtained from remote secrets managers like HashiCorp’s Vault. Because IO handles authentication, application code doesn’t have to do anything to authenticate and never even sees authentication credentials, keeping secrets safe from untrusted applications.

Calling Mode can record traffic. Requests and responses can be reviewed to audit applications and replayed to test both calling code and the upstream services being called.

Finally, Calling Mode can provide fine-grained controls over an API’s usage that go beyond scopes provided by upstream API providers. IO users can use operation lists to limit applications to only approved API operations, and application usage of APIs can be monitored for auditing.
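To make the idea of an operation list concrete, here is a minimal sketch of allow-list matching on HTTP method and path template. It is not IO's matching logic, which the text does not describe; the function names and the example path template are hypothetical.

```python
import re


def compile_operation(method: str, path_template: str):
    """Turn a method and a path template such as /v1/words/{word}/definitions
    into a (METHOD, compiled regex) pair."""
    # Split on {param} placeholders; escape the literal pieces in between.
    pieces = re.split(r"\{[^/{}]+\}", path_template)
    # Each placeholder matches exactly one path segment.
    pattern = "[^/]+".join(re.escape(piece) for piece in pieces)
    return method.upper(), re.compile(f"^{pattern}$")


def is_allowed(operations, method: str, path: str) -> bool:
    """Return True only if the request matches one of the approved operations."""
    return any(
        allowed_method == method.upper() and regex.match(path) is not None
        for allowed_method, regex in operations
    )


if __name__ == "__main__":
    approved = [compile_operation("GET", "/v1/words/{word}/definitions")]
    print(is_allowed(approved, "GET", "/v1/words/proxy/definitions"))
    print(is_allowed(approved, "DELETE", "/v1/words/proxy/definitions"))
```

Anything not on the list is rejected by default, which is what makes an allow-list stricter than the scopes an upstream provider typically offers.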

Serving Mode in Depth

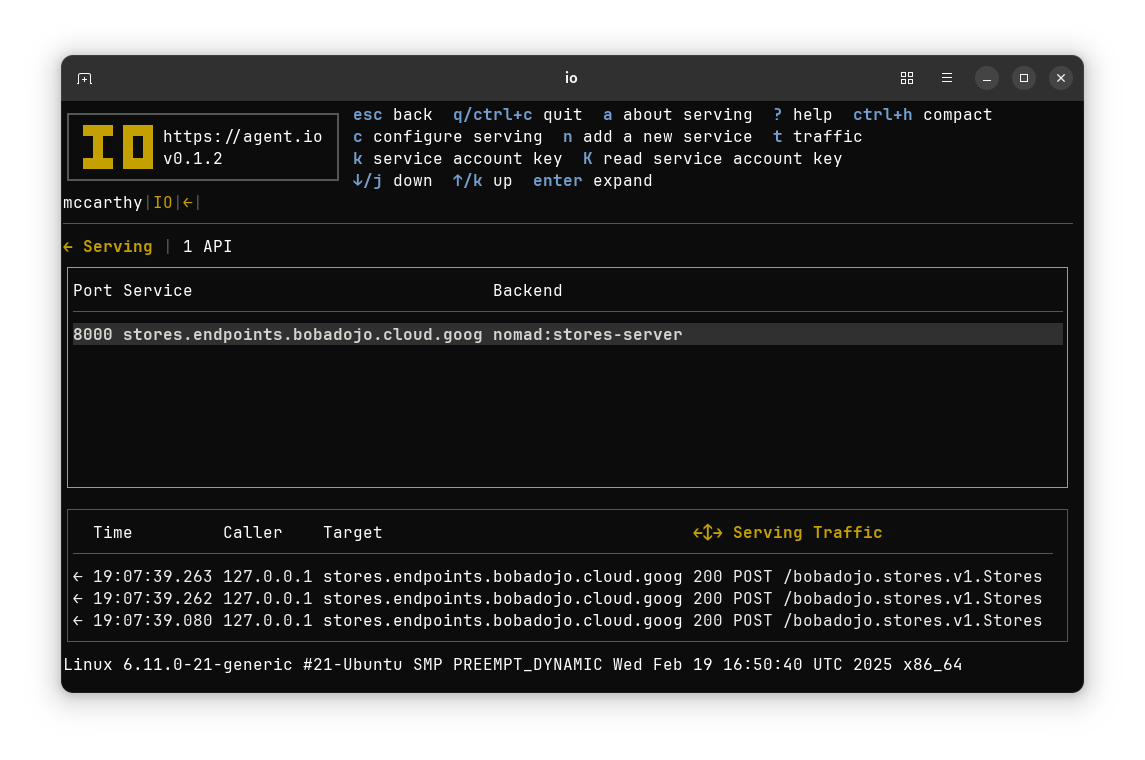

IO’s Serving Mode provides a reverse proxy that observes and secures API calls to an application.

In Serving Mode, IO protects API-serving applications from unauthorized access and ensures that quotas, rate limits, and other constraints on API usage are enforced.

Serving Mode can also be used to enforce HTTP Basic Authentication, to require API keys, or to require AT Protocol identities for backend sites and services that might otherwise be unsecured.
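For readers unfamiliar with what enforcing HTTP Basic Authentication involves, the following sketch shows the check a reverse proxy has to perform on the `Authorization` header. It illustrates the standard scheme only and is not taken from IO; the function name and the example credentials are hypothetical.

```python
import base64
import binascii
import hmac


def check_basic_auth(header_value, expected_user: str, expected_password: str) -> bool:
    """Validate an 'Authorization: Basic <base64(user:password)>' header value."""
    scheme, _, encoded = (header_value or "").partition(" ")
    if scheme.lower() != "basic":
        return False
    try:
        decoded = base64.b64decode(encoded.strip(), validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return False
    # The user name ends at the first colon; the password may contain colons.
    user, separator, password = decoded.partition(":")
    if not separator:
        return False
    # Constant-time comparisons avoid leaking how many characters matched.
    user_ok = hmac.compare_digest(user.encode(), expected_user.encode())
    password_ok = hmac.compare_digest(password.encode(), expected_password.encode())
    return user_ok and password_ok


if __name__ == "__main__":
    header = "Basic " + base64.b64encode(b"alice:s3cret").decode()
    print(check_basic_auth(header, "alice", "s3cret"))
```

Doing this at the proxy means a backend that has no authentication of its own never receives an unauthenticated request.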

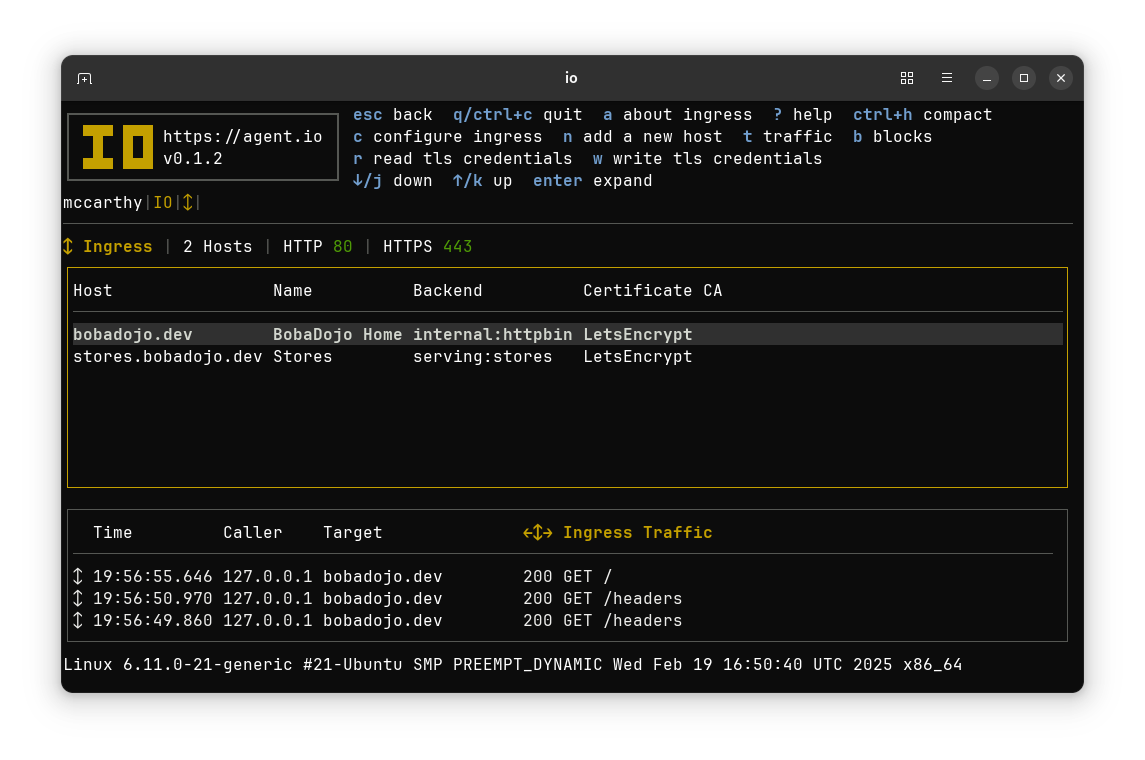

Ingress Mode in Depth

IO’s Ingress Mode provides a TLS gateway that can be used to serve multiple backends with HTTPS.

In Ingress Mode, IO makes backend services publicly available, typically using the public ports for HTTP (80) and HTTPS (443).

Ingress backends can be Calling Mode or Serving Mode interfaces or any other services within network reach of IO. Typically these services are in internal networks and communicate locally using HTTP.

With built-in support for the ACME protocol, IO can automatically obtain certificates from Let’s Encrypt and potentially other ACME-compliant providers, making setup and operation of secure HTTPS services a breeze.

IO can also serve as an OAuth client. When it is configured with an OAuth client ID, client secret, and other OAuth configuration details, IO can act as a Confidential Client on behalf of a proxied application. It then provides the proxied application with a header value that the application can use to make Calling Mode requests to OAuth provider services on behalf of authenticated users. IO also supports AT Protocol authentication and authorization; details on that will be available soon.

Recording Network Traffic

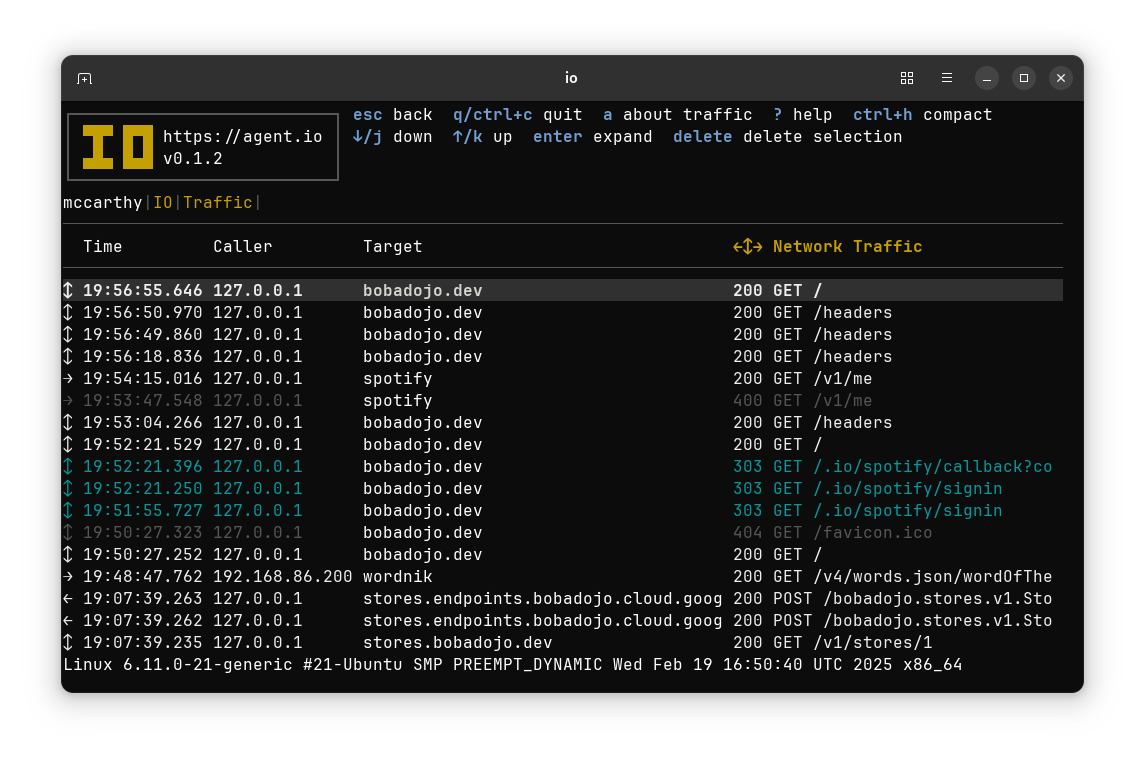

For each of its modes, IO configures Envoy to handle network traffic. By default, this traffic is recorded in IO’s local database and can be viewed in the TUI, where traffic lists use arrows to indicate the type of traffic.

- Right arrows (→) represent calling (forward proxying).

- Left arrows (←) represent serving (reverse proxying).

- Up/Down arrows (↕) represent ingress (aka “north/south” traffic).

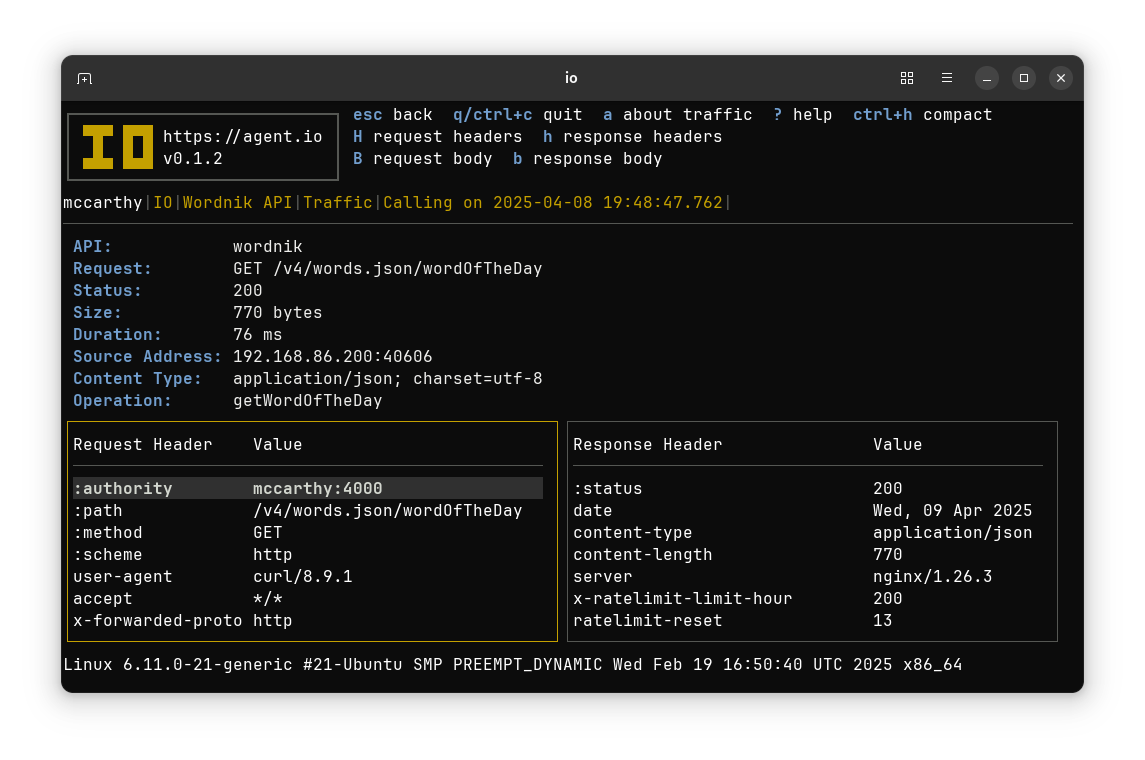

You can interactively select individual items to see traffic details that include the headers of requests and responses.

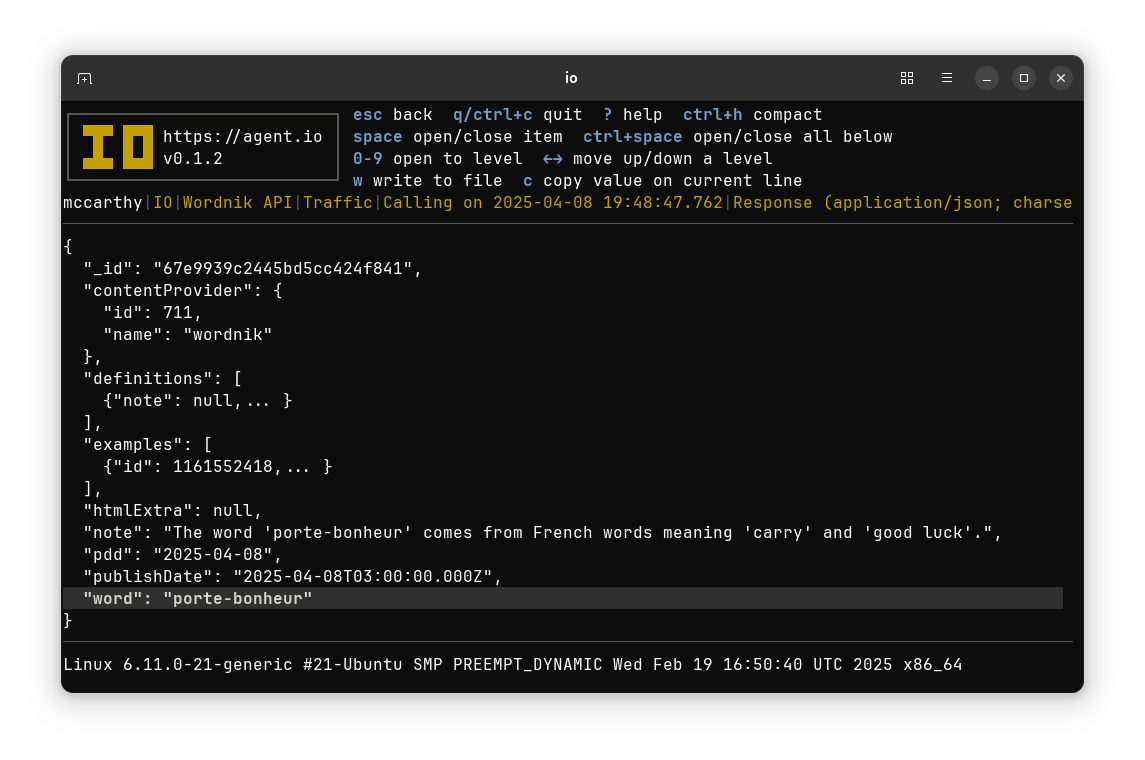

From there you can also go to details screens to view request and response bodies.

Configure IO Interactively or with HCL

When it is started with the -i (--interactive) flag, IO runs with its interactive terminal user interface, which guides configuration and operation. Configuration can be imported and exported as HCL that can fully control IO. In production (and by default), this same user interface is available over SSH and configuration can be read and written using SFTP.

IO’s configurability is rapidly growing. Here are a few snippets to show what’s already possible.

Calling Configuration

```hcl
calling "wordnik" {
  name   = "Wordnik"
  port   = 4003
  target = "api.wordnik.com"

  apply_query "api_key" {
    secret = "wordnik"
  }
}
```

This block configures IO to call the Wordnik API. Local clients send uncredentialed requests to port 4003, and IO adds the secret named wordnik from its local store to each request as a query parameter named api_key. Not shown: much of this information can be read from the Wordnik OpenAPI description, and when IO imports OpenAPI, it also builds a list of operations that can be used to allow specific requests and block all others.
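From the application's side, a call through this interface might look like the sketch below. It rests on several assumptions that the text does not confirm: that IO listens on localhost, accepts plain HTTP on port 4003, and forwards the request path unchanged. The path shown follows Wordnik's public v4 API as commonly documented, and the function name is hypothetical.

```python
from urllib.parse import quote
from urllib.request import Request

# Assumption: the calling interface from the configuration above is reachable
# on localhost; only the port number (4003) comes from the configuration.
IO_CALLING_BASE = "http://localhost:4003"


def definitions_request(word: str) -> Request:
    """Build an uncredentialed request for a word's definitions."""
    path = f"/v4/word.json/{quote(word, safe='')}/definitions"
    # No api_key here: the proxy is expected to add it before calling upstream.
    return Request(IO_CALLING_BASE + path, headers={"Accept": "application/json"})


if __name__ == "__main__":
    request = definitions_request("proxy")
    print(request.full_url)  # http://localhost:4003/v4/word.json/proxy/definitions
    # With IO running, the request could be sent with urllib.request.urlopen(request).
```

The application code contains no secret and no reference to api.wordnik.com, so rotating the key or changing the upstream requires no application change.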

Serving Configuration

```hcl
serving "stores" {
  name    = "Boba Dojo Stores"
  port    = 8008
  backend = "nomad:stores-server"

  grpc_json {
    services = ["bobadojo.stores.v1.Stores"]
  }
}
```

This block configures IO to serve the Boba Dojo Stores API, a sample gRPC API that demonstrates Envoy’s and IO’s strength at gRPC API management. Using gRPC Transcoding, this API is also available as an HTTP/JSON service, and with additional configuration, requests can be checked for valid API keys and tracked with OpenTelemetry. The backend can be specified as an address, but here the nomad:stores-server string tells IO to look up the address of the stores-server job in a local Nomad cluster (reachable at the address specified by the NOMAD_ADDR environment variable).

Ingress Configuration

```hcl
http_port  = 80
https_port = 443

ingress "stores.bobadojo.io" {
  name    = "stores"
  backend = "serving:stores"
}
```

This block configures IO to serve HTTP and HTTPS for the stores.bobadojo.io hostname, forwarding requests to the Serving Mode interface specified above. As above, backends can also be network addresses or Nomad jobs.
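The backend strings in these snippets follow a "kind:name" pattern, with plain network addresses as the fallback. The sketch below shows one way such references could be told apart. It is a guess at the convention based only on the examples in this document (nomad, serving, internal); the function name and the rule for recognizing addresses are hypothetical, and the meaning of the internal prefix is not specified in the text.

```python
# Prefixes that appear in this document's configuration examples.
KNOWN_KINDS = {"nomad", "serving", "internal"}


def parse_backend(reference: str):
    """Split a backend reference into (kind, name).

    Anything without a recognized prefix is treated as a network address,
    so "10.0.0.5:8080" is not mistaken for a kind named "10.0.0.5".
    """
    kind, separator, name = reference.partition(":")
    if separator and kind in KNOWN_KINDS:
        return kind, name
    return "address", reference


if __name__ == "__main__":
    for ref in ("serving:stores", "nomad:stores-server", "10.0.0.5:8080"):
        print(ref, "->", parse_backend(ref))
```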

```hcl
ingress "test.bobadojo.io" {
  backend = "internal:httpbin"

  oauth_client "google" {
    client_id        = REDACTED
    client_secret    = REDACTED
    authorize_url    = "https://accounts.google.com/o/oauth2/auth"
    access_token_url = "https://accounts.google.com/o/oauth2/token"
    scopes           = ["profile", "email"]
  }

  oauth_client "spotify" {
    client_id        = REDACTED
    client_secret    = REDACTED
    authorize_url    = "https://accounts.spotify.com/authorize"
    access_token_url = "https://accounts.spotify.com/api/token"
    scopes = [
      "user-modify-playback-state",
      "user-read-currently-playing",
      "user-read-email",
    ]
  }
}
```

Here’s another host configuration. This one configures IO to perform OAuth login and token management for the Google and Spotify OAuth providers. When IO forwards requests to the backend (internal:httpbin), it sends the proxy-session header, which backends can send back to IO when they make Calling Mode requests to the enabled Google and Spotify APIs. IO adds the authenticated user’s tokens to the calling requests, and the applications never see these tokens or have to worry about storing or refreshing them.
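A backend application's part in this flow is small: copy the session header from the incoming request onto its outgoing Calling Mode request. The sketch below shows that step in isolation. Only the header name proxy-session comes from the text; the function name and the plain-dictionary representation of headers are hypothetical, and how IO validates the value is not covered here.

```python
SESSION_HEADER = "proxy-session"  # header name as given in the text above


def calling_headers(incoming_headers: dict) -> dict:
    """Return the headers to attach to a Calling Mode request.

    The application passes the session value through untouched; it never
    handles the user's OAuth tokens itself.
    """
    # HTTP header names are case-insensitive, so compare in lowercase.
    session = next(
        (value for name, value in incoming_headers.items() if name.lower() == SESSION_HEADER),
        None,
    )
    if session is None:
        raise LookupError(f"incoming request has no {SESSION_HEADER} header")
    return {SESSION_HEADER: session}


if __name__ == "__main__":
    incoming = {"Host": "test.bobadojo.io", "Proxy-Session": "opaque-session-value"}
    print(calling_headers(incoming))
```

Because the value is treated as opaque, the application needs no OAuth library and no token storage.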

Keep Secrets Where They Belong: Out of Your Applications!

Secrets managers keep secrets safe until the moment when they give them to applications to use. After that, anything can happen. An application can write secrets to log files, use them inappropriately, or even leak them through deliberate side channels. With IO, you can keep secrets out of applications and save your applications the trouble of integrating secrets manager SDKs (leave that to IO).

View Envoy Configurations

For Envoy dilettantes, IO includes a screen that shows details of the current Envoy configuration, focusing on listeners and clusters, the “ins” and “outs” of a working Envoy.

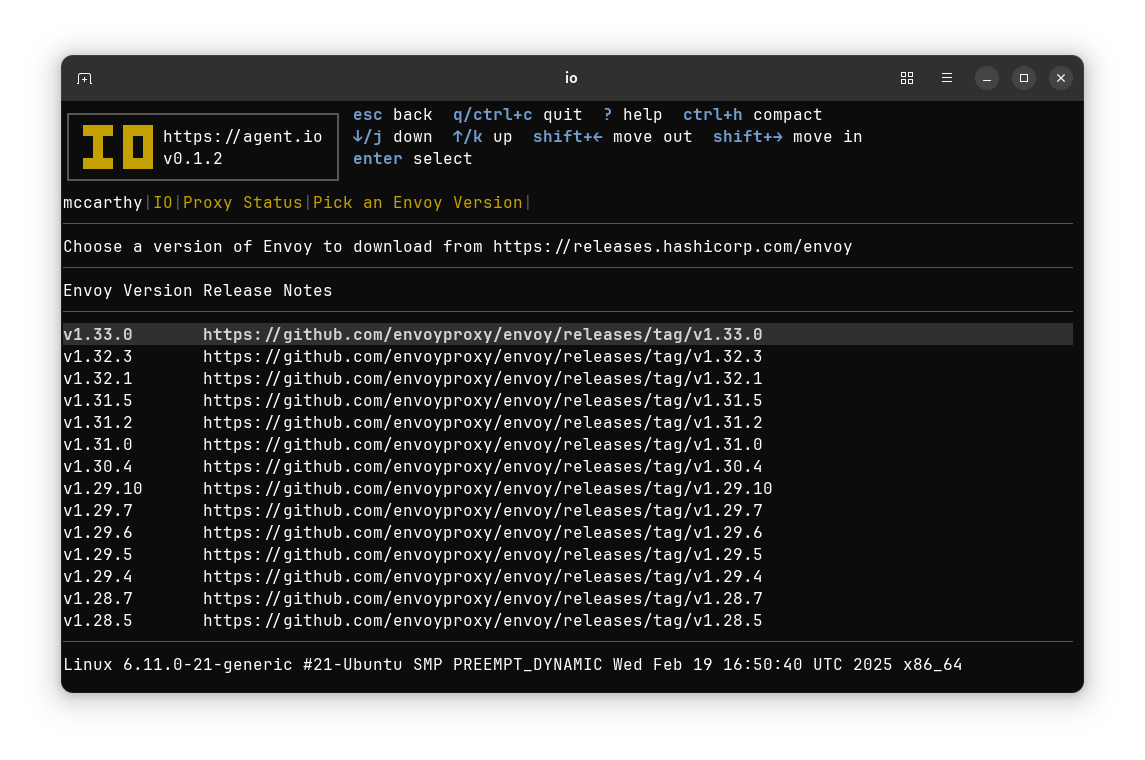

Bring Your Own Envoy or Fetch One with IO

IO uses the PATH environment variable to find the locally installed Envoy. When no local Envoy is available, interactive IO users can fetch and use Envoy release builds. That isn’t necessary when you use an IO container build: the official IO container images are standard Envoy container images (envoy/envoy-distroless) with an added IO binary.

Supported Platforms

IO is developed and verified on Ubuntu Linux for the amd64 architecture.

Availability

IO is available in a limited private preview. Follow @agent.io for news.